Andrés Almeida (Host): Welcome to Small Steps, Giant Leaps, the NASA APPEL Knowledge Services podcast. I’m your host, Andrés Almeida. In each episode, we dive into the lessons learned and experiences of NASA’s technical workforce.

NASA has been safely using artificial intelligence for decades. It’s used for planning deep space missions, analyzing climate data, and even diagnosing problems. Now we’re in a new era where it’s possible for AI to be used by more and more people across NASA. While streamlining decision-making and saving resources, AI could leverage the full potential of the workforce.

With us for this episode is Ed McLarney, NASA’s lead for digital transformation and machine learning.

Host: Hey Ed, welcome to the show.

Ed McLarney: Well, thanks very much. I’m glad to be here.

Host: What is NASA’s message surrounding AI?

McLarney: Well, NASA really wants to make the most of AI while doing so in a safe, secure, responsible and respectful manner, and truthfully, that reflects the guidance that we’re getting from the White House and from the Office of Management and Budget and federal directives that are coming down. So we’re embracing those federal directives, and we’re also figuring out what that means to NASA, and how we can approach AI in the most positive manner, while also being careful.

Host: And what are NASA’s current AI guidelines? What AI uses are not permitted, and what are the policies that restrict NASA’s use of AI?

McLarney: One of the things that our chief information officer really stressed, as generative AI became available, was that we need to lean on existing policies and procedures as the AI space continues to evolve. In May 2023, a group of us worked with the chief information officer to put out initial guidance, based on existing policy, for NASA use of generative AI. That includes the ability for NASA workers to experiment with non-sensitive public data, so data where there’s really no threat, using personal accounts on external AI capabilities. And that might sound pretty restrictive, but truthfully, there are a lot of things that you can experiment with that are out in the public domain.

For example, many of NASA’s publications or policies are in the public domain, and different groups have used openly available AI capabilities to help explore those or interact with those in chatbot fashion. What we can’t do for the time being, until we get systems approved for more sensitive kinds of data, is use internal controlled unclassified information or internal sensitive information in unapproved systems.

Now the cool thing is, NASA is on the cusp of approving our first FISMA Moderate, so this is a security kind of classification or categorization, where we’re working on our first capability for FISMA Moderate data, which will be Microsoft’s Azure OpenAI. OpenAI is the company that makes ChatGPT, and this is the way that they’re bundling it through Microsoft Cloud Services to make that available for us. So once that becomes available, we start lifting some of the prohibitions regarding sensitive internal NASA data. So it’s neat to see the technical progress, and the guidance will be updated to reflect that technical progress.

Host: How does NASA currently safely use AI?

McLarney: So another element is NASA’s got a whole variety of existing quality control processes, system engineering reviews, engineering management boards, cybersecurity reviews, supply chain reviews. And NASA has a really great culture and heritage for excellence in engineering and the scientific process. So that culture and all of those existing processes need to apply to AI. All of us need to think about, “If I’m adding AI to the mix, do those existing processes and procedures cover the AI considerations? Or are there additional considerations for AI that we need to bring into the mix?” So that’s something that we’ll all learn together.

But to me, NASA’s workforce is an incredible set of professionals, and in any profession, part of your job is to responsibly use the tools that you have available to you. AI is no exception. Wherever possible, we need to use AI as an emerging tool in concert, or, you know, in accordance with our existing culture and professions.

Host: So there might be a misconception that NASA is new to AI, or hasn’t used AI before. Is that true?

McLarney: Well, I’ve met a wide variety of people who joined NASA 30 or 40 years ago, and their Ph.D. was in artificial intelligence, and they’ve been driving AI into specific NASA solutions along that entire time frame. Over the years, there’s kind of been this hype cycle, where there was a lot of promise for AI maybe 30 or 40 years ago, and there were difficulties in implementing some of the things that were theoretically possible.

But those experts either continued to thrive in helping AI make progress that entire time, or maybe delved into other scientific areas, research areas, and then came back to AI, where there’s now really a resurgence of AI, or you might even consider it that the AI technologies that are coming aboard now are making AI really available in widespread form. And so, those experts who have used it forever, maybe they get to use it even more now. For the rest of us, for whom AI was never an option, it’s pretty neat to be able to get into it and use it.

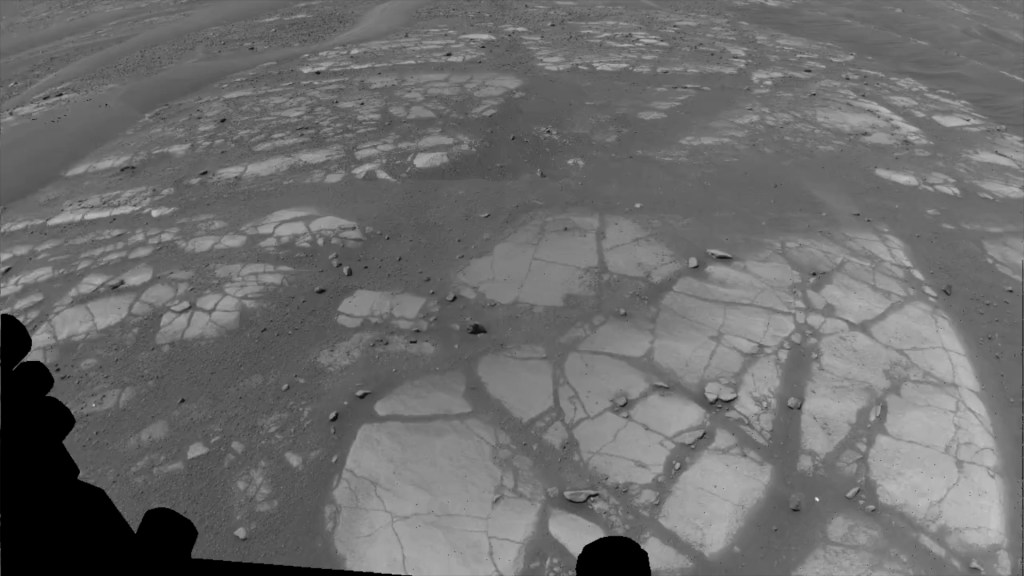

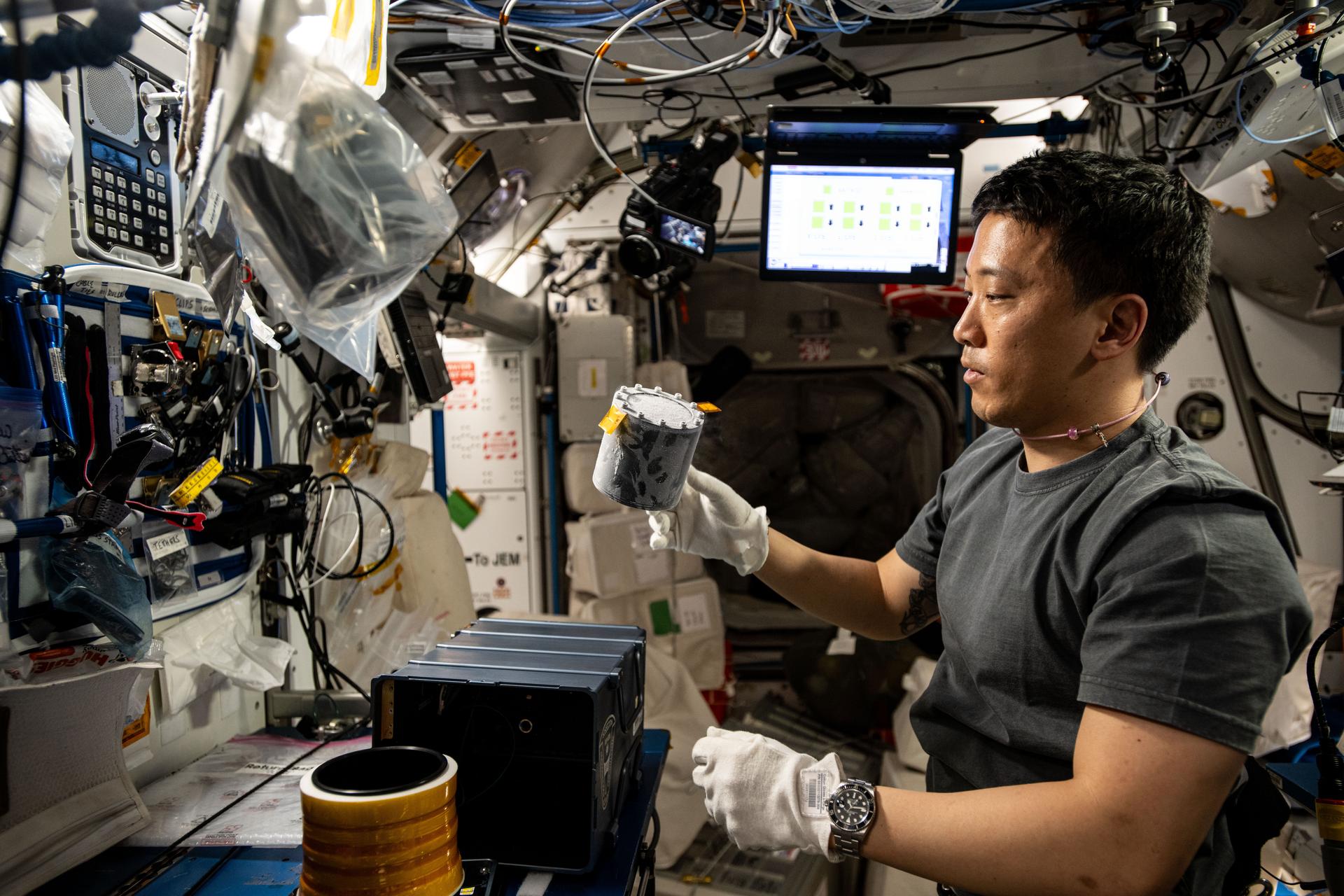

But yeah, NASA has been using AI for a long time. Think about autopilots and aircraft. Mars rovers navigating, you know, from one waypoint to the next autonomously. Many, many, many examples. The Science Mission Directorate has a capability called TESS [Transiting Exoplanet Survey Satellite]. That capability has used the fluctuation in the light from distant stars, doing machine learning analysis of that light fluctuation, to hypothesize or to detect that planets are actually traversing between the distant star and us, and they’re finding multiple exoplanets, and sometimes they even think they’ve found multiple stars orbiting one another, so the Three-Body Problem and far beyond.

Host: What is something that NASA is trying to achieve with AI? What sort of trends and data has NASA uncovered?

McLarney: For our scientific purposes, there have been AI researchers or AI engineers working those angles for many years, but we think for our business functions or our mission support functions, human resources, finance, legal, procurement, there are trends in the data that AI or machine learning might help us uncover that were either difficult or impossible to find before. Beyond just finding trends, we think that AI may become an assistant to help with many of those mission-supporting processes. And so, where it can act as an assistant, maybe it will free up the human being for higher cognitive load capabilities and take over some of the repetitive work, or some of the things that don’t take as much brain power and currently take time. So if AI can lift us out of there and give us all superpowers, that’d be pretty amazing.

Host: That’s an important point, because there’s also the idea that AI has the potential to stymie creativity. Is that necessarily true?

McLarney: Like anything, any given technology, if you use that technology as a crutch, maybe it can serve to let those quote-unquote muscles atrophy, right? If you use it as a creative crutch, maybe your creativity could falter. But if you use AI as an amplification or an augmentation of your abilities, let AI do the easy stuff, let AI do the repetitive stuff, then the human being gets to focus more on the creative aspects. Then, to me, I see AI as more of an amplifier or an augmenter to your creativity, rather than a replacement for it.

I happen to be a songwriter by hobby. And, you know, I know there’s a lot of buzz about generative AI being able to write lyrics, or generative AI being able to make chords and melody and backing instruments, but I would argue that there’s a long way to go between those nascent capabilities and something that really grips you by the heart as powerful art. So I think creativity is going to just be augmented by machines rather than replacing our human creativity.

Host: How can the workforce get involved?

McLarney: So, we did have a summer focused-learning campaign for AI. It was called the Summer of AI. A couple of amazing leaders ran that for us, Jess Diebert and Krista Kinnard. They were able to coordinate over 40 events all across NASA. Different centers, different organizations were running those events, and they were willing to share them with one another, which was pretty amazing.

As a part of that, we had over 4,000 unique learners participate in one or more events. Some of those events might have been anything from a lunch-and-learn all the way to a several-day workshop on how a given mission or center was embracing AI. So, pretty amazing to do that, and what I really liked about that was just seeing all the enthusiasm all across the agency for different people and different organizations, different centers, to run events, or to do a speaking engagement or conduct a class, and the willingness to do it together. And again, Jess and Krista coordinated it, and it was contributed to by a wide variety of people.

So the thing is, though, the summer is over, but our AI workforce development training efforts are not over. So our learning platform has a content engine with all kinds of technical training in it for artificial intelligence, collaboration, systems, machine learning, Internet of Things, any given technical area that is coming along in the world, software coding, and any of those kinds of things are available in our learning platform. So that continues to be available as an individual resource, and you or I could go take a class there this afternoon. And I know that many organizations and centers are going to keep doing different events too.

So, you know, while Summer of AI is over, long live Summer of AI. I’m sure we’ll be doing even more learning over the years. Something I saw in a kind of industry forum several years ago really caught my eye. And the idea was, if you want to make a transformation happen in your organization, educate your people or let them educate themselves and they’ll demand the transformation. They’ll make the transformation happen. And so, where we can do that for AI, there’s a lot of potential there.

Host: So do you find yourself working with other agencies as well to learn from one another?

McLarney: Yes, that’s part of the federal directives that came out. So Executive Order 14110 came out last October, and Office of Management and Budget memo M-24-10 came out this last March. They encourage collaboration across the federal government. In fact, the executive order coming from the White House really encourages nationwide AI leadership. So not just in government, but government, industry, academia, private citizens, and it really encourages the United States to pursue continued AI leadership while doing so safely and securely. The executive order actually puts additional emphasis on safety and respecting our rights. Both of those things are really key.

As we pursue our AI approaches, you know, making AI far more available across the agency, we’re also making sure that we do that in accordance with those directives. Truthfully, they’re consistent with our culture again, and it’s the way that our top-level leaders, all the way up to the president, want the nation to pursue it: safely, securely, respectfully. All of that kind of nests together really nicely.

Host: How does NASA ensure the quality of our AI models and the responses we receive? It sounds like there could be challenges of having an AI model that’s isolated and may not be able to be updated due to limited communications.

McLarney: I think one of the really important things we all can do with AI, as it’s having this resurgence and really growing in popularity, is try it out and see where it gives you good results and see where it gives you bad results, and share both of those categories with one another.

I’m sure we’ve all seen funny examples of how generative AI generates new content based on its training and the queries that you give it. We’ve all seen really funny examples of how it will make mistakes. It will hallucinate.

To me, we learn through sharing both the successes and the failures and even the funny failures there, and that helps us tune and train our AI systems better. But, you know, you or I are not actually the ones that are training ChatGPT, for example. Industry is training that. But if we get better at interacting with it, entering our prompts, knowing how to ask a question better, or how to give the right context better for a given question, we can learn, on the human side, to get more out of the AI systems and get better answers and fewer hallucinations.

At the same time, you know, those who create the models are working diligently to make them better and better. Yeah, it’s a great question about, you know, if you deploy an AI system. You can’t just deploy it and let it go, because there’s a thing called model drift, where, you know, the AI may start out with decent answers, and along the way, it may degrade. So one element of deployment is continually checking your system, keeping human judgment, you know, keeping a human in the loop.

You think about some of NASA’s missions. We’ve got Voyager still sending back signals after more than 40 years. We need to come up with ways to track and update the AI systems on very far distant capabilities. One way you could do that is, you know, humans could keep doing a system check. And maybe you deploy a system upgrade to something that’s in flight, even, you know, well beyond the solar system. Another potential technique could be, what if you have the main AI engine for a space probe, and then you’ve got, like, its little conscience AI, which is an add-on, so one AI would be checking the other one. That’s still probably science fiction at this point, but it’s a concept that might work. I think what works for the time being is keeping humans in the loop. But maybe someday one AI could be supervising another one, and another one.

Host: What do you consider to be your giant leap?

McLarney: From probably third grade on, I’ve been a huge NASA fan, space enthusiast, science fiction enthusiast. I went through my teenage years just consuming all the science fiction reading that I could. Some of that, and my family upbringing, gave me this sense of service. And so I actually went to military school. I was commissioned as an officer engineer in the United States Army, and wound up making that a career. After that, I was a contractor for about a year, and then had the opportunity to come to NASA. And to me, my giant leap was reinventing myself after that military career and just having an amazing time helping with a wide variety of transformation at NASA, to include worming my way into artificial intelligence.

So, maybe eight or 10 years ago, I had a colleague who started a data science team at Langley Research Center, and she and I worked hand-in-hand on a lot of local transformation at Langley. She wound up retiring. I inherited that data science team and kept it going. And then, along with that, NASA digital transformation was coming along, and I was lucky enough to be asked to lead the AI element in digital transformation. But the big leap for me was translating all that military experience and kind of taking a leap of faith. And truthfully, the NASA leaders who hired me, they took a leap of faith that this Army guy could come and do something useful at NASA. And I feel really lucky to have been able to do that.

And really, it’s neat for me to be able to help NASA transform and really dig into AI, and I hope when I’m done, people will be able to say that I helped them a little bit, because my entire objective is to help people, especially to help with transformation.

Host: I have no doubt about that. Thanks for your time, Ed.

McLarney: Thanks very much, Andrés. Great talking with you.

Host: That’s it for this episode of Small Steps, Giant Leaps. For more on Ed and the topics discussed today, visit our resource page at appel.nasa.gov. That’s A-P-P-E-L dot NASA dot gov. And don’t forget to check our other podcasts like “Houston, We Have a Podcast” and “Curious Universe.”