Flights Help ‘Teach’ Drones to Navigate the World

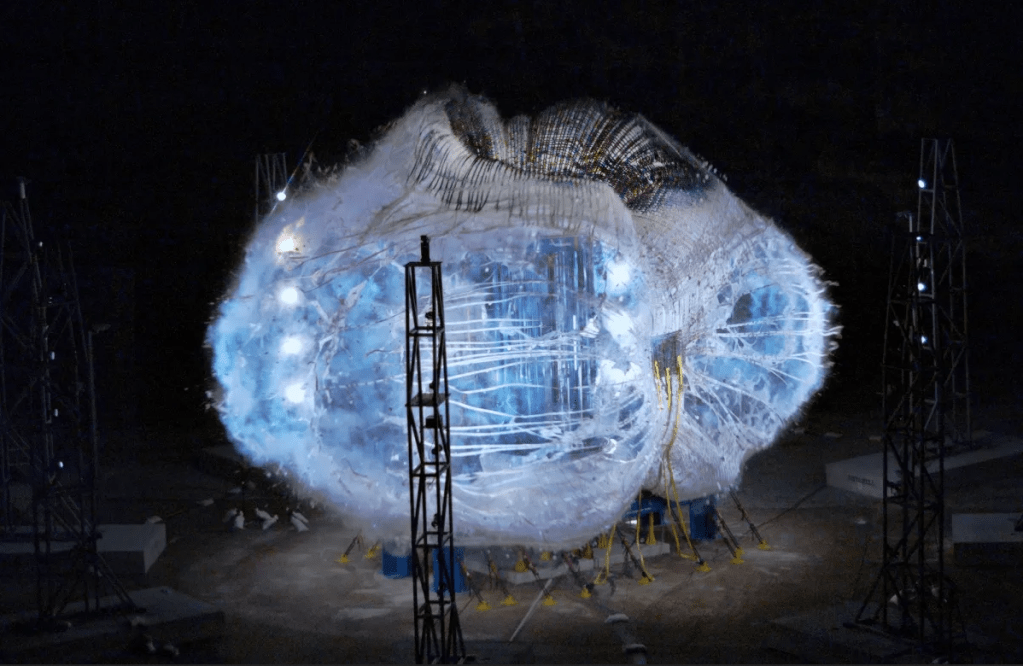

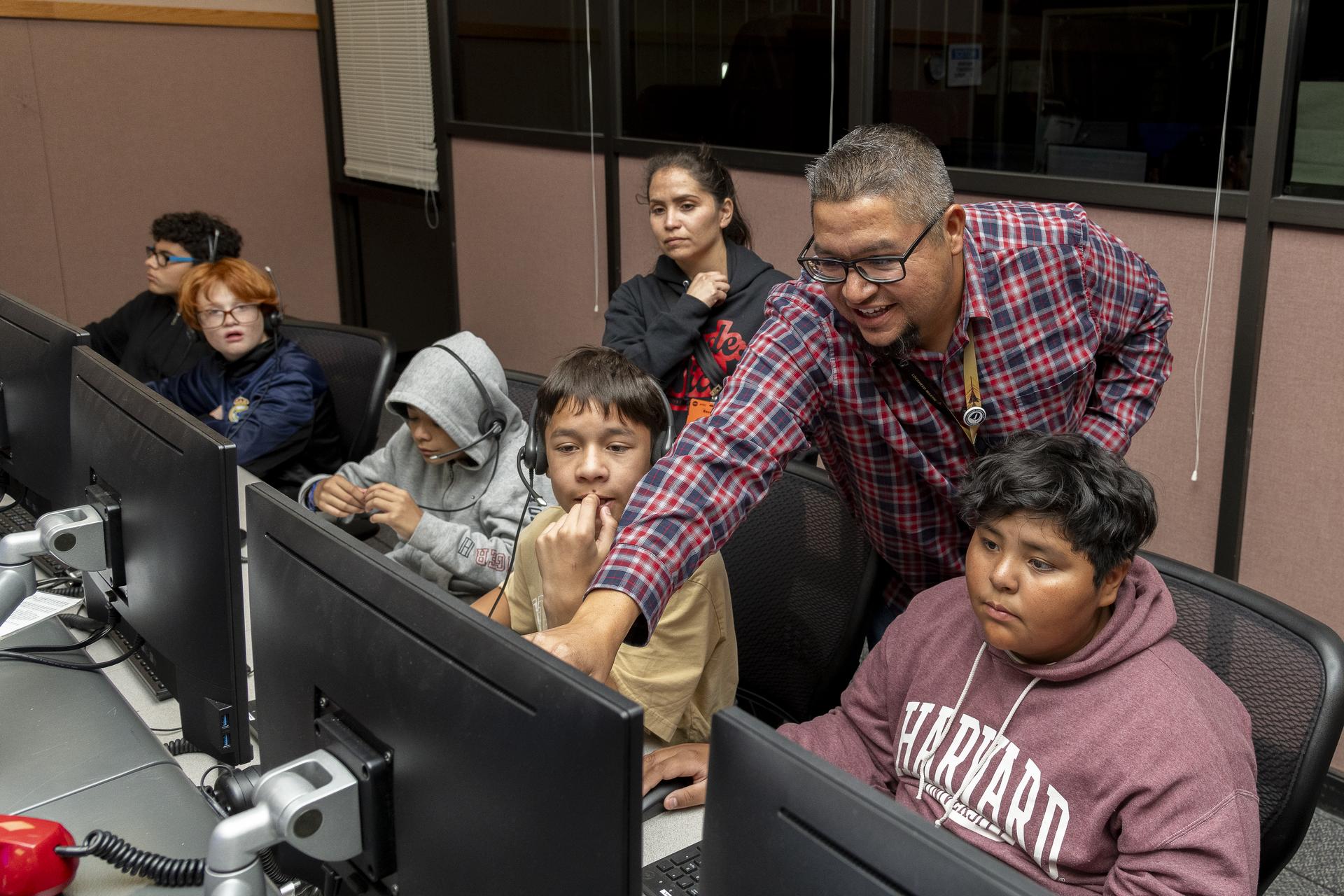

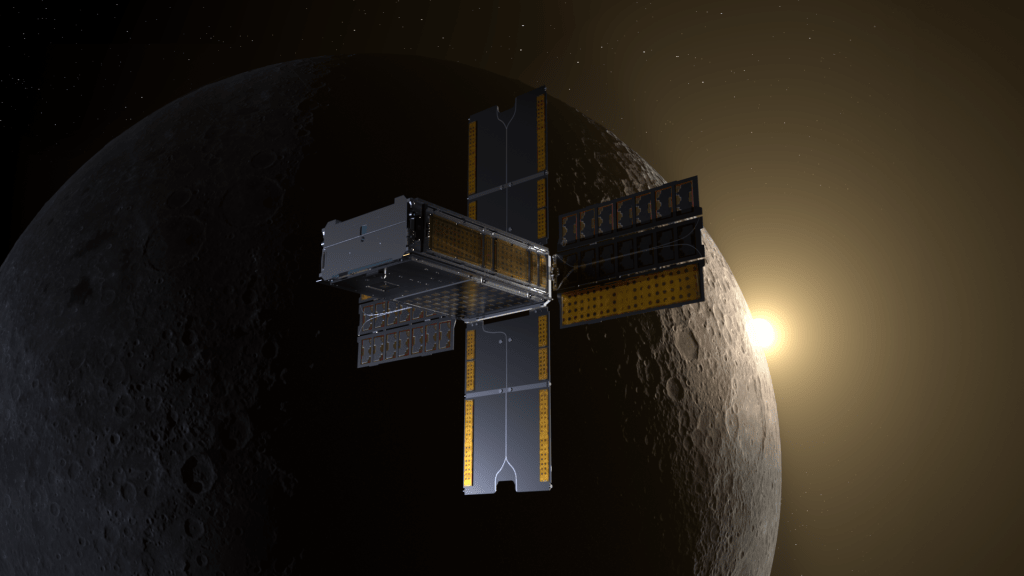

If small, unmanned aircraft could be made smarter and more independent, they could help firefighters and other responders do even more in the wake of natural disasters. In December 2021, NASA’s Scalable Traffic Management for Emergency Response Operations activity, or STEReO, ran early flight tests of the technology the team is developing at NASA’s Ames Research Center in California’s Silicon Valley. Data collected during the flights will help “teach” unmanned aircraft systems (also called UAS or drones) how to understand their surroundings and navigate the world. This could be a real benefit for emergency response: if UAS could fly and land safely beyond their pilot’s view, they could carry out certain tasks on their own, such as visually identifying and then mapping a wildfire perimeter.

During the tests, a large quadcopter UAS flew at low altitude, between about 100 and 150 feet. It carried several sensors, including a camera and a lidar scanner, that collected data to later train the aircraft’s “brain.” The goal is for future vehicles to react to their environment in real time, for instance by detecting and avoiding obstacles in flight. Researchers are developing software that can be taught how to interpret what an aircraft’s sensors perceive and what actions to take in response, a type of machine learning. STEReO’s data, captured in the real world around buildings, trees, and other physical features, will make that training easier.

STEReO aims to support the modernization of emergency response by scaling up the role of unmanned aircraft and helping operations adapt to rapidly changing conditions during a disaster. The recent tests will help give a UAS the ability to conduct a mission autonomously, even if the vehicle is beyond the pilot’s view or has no GPS signal. That way, these small, agile aircraft will still be able to navigate in remote or disaster-struck areas without connectivity.
In more urban settings, autonomous aircraft, including those carrying passengers, may face different challenges and requirements. Another NASA project will use the data from the recent flight tests to help develop similar perception capabilities for use in cities. The Transformational Tools and Technologies project’s Autonomous Systems subproject is focused on giving these future aircraft enough information to make decisions about their environment, so that a human operator only needs to interact with the flight at a high level. That might mean issuing a command telling the vehicle to return to base, rather than taking direct control and flying it back. This could allow one person to oversee multiple flights at once, making it easier to scale up the use of these aircraft for Advanced Air Mobility in the future airspace.

Author: Abby Tabor, NASA’s Ames Research Center
