When it comes to making real-time decisions about unfamiliar data – say, choosing a path to hike up a mountain you’ve never scaled before – existing artificial intelligence and machine learning tech doesn’t come close to measuring up to human skill. That’s why NASA scientist John Moisan is developing an AI “eye.”

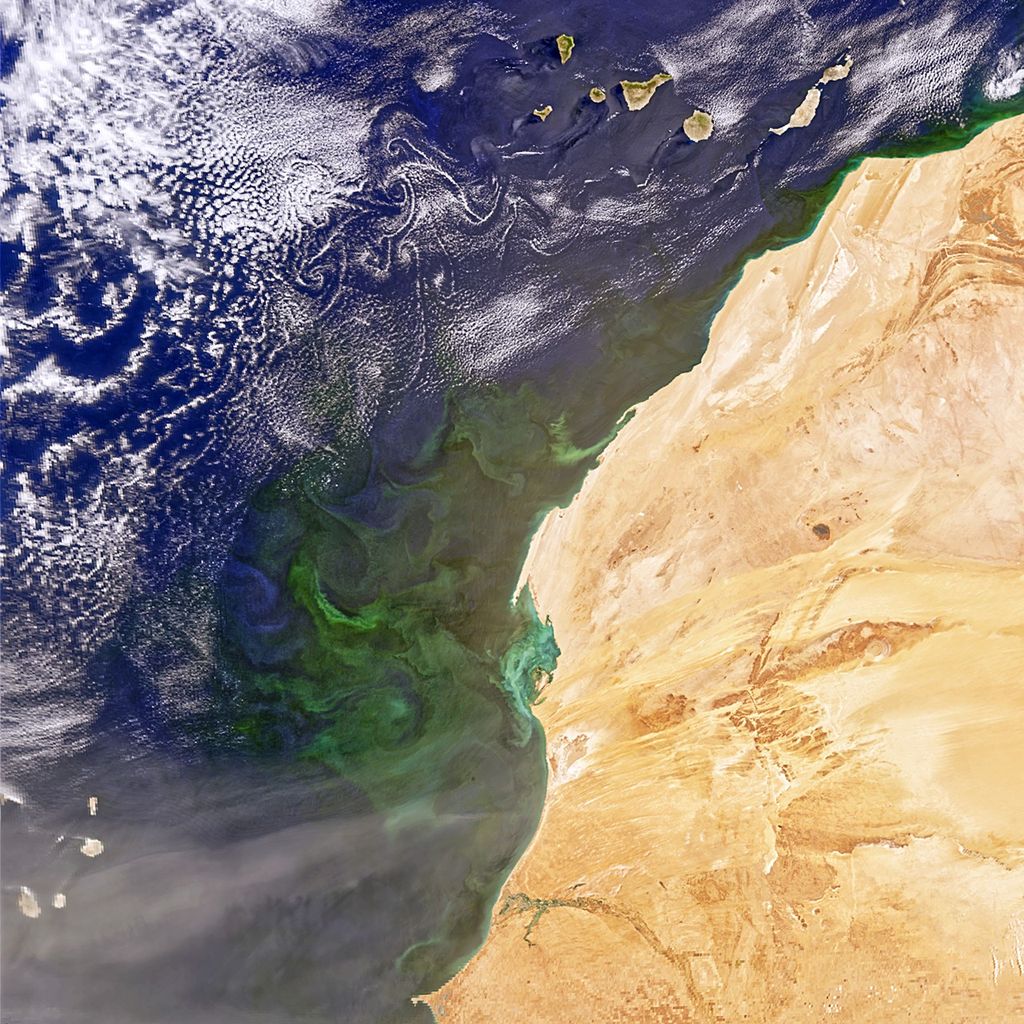

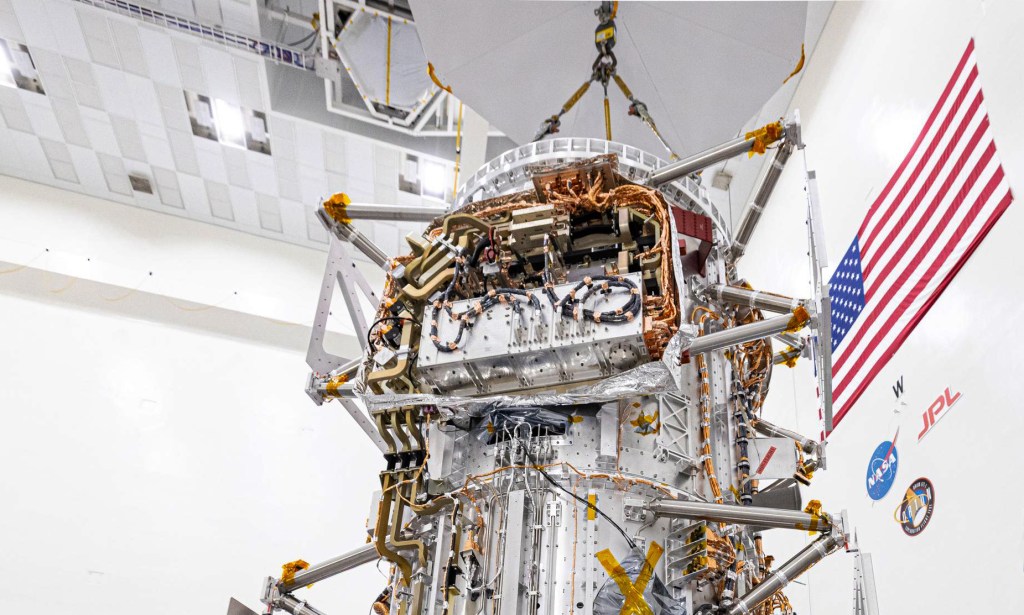

Moisan, an oceanographer at NASA’s Wallops Flight Facility near Chincoteague, Virginia, said AI will direct his A-Eye, a movable sensor. After analyzing images, his AI would not just find known patterns in new data, but also steer the sensor to observe and discover new features or biological processes.

“A truly intelligent machine needs to be able to recognize when it is faced with something truly new and worthy of further observation,” Moisan said. “Most AI applications are mapping applications trained with familiar data to recognize patterns in new data. How do you teach a machine to recognize something it doesn’t understand, stop and say ‘What was that? Let’s take a closer look.’ That’s discovery.”

Finding and identifying new patterns in complex data is still the domain of human scientists, and how humans see plays a large part, said Goddard AI expert James MacKinnon. Scientists analyze large data sets by looking at visualizations that can help bring out relationships between different variables within the data.

It’s another story to train a computer to look at large data streams in real time to see those connections, MacKinnon said, especially when looking for correlations and inter-relationships in the data that the computer hasn’t been trained to identify.

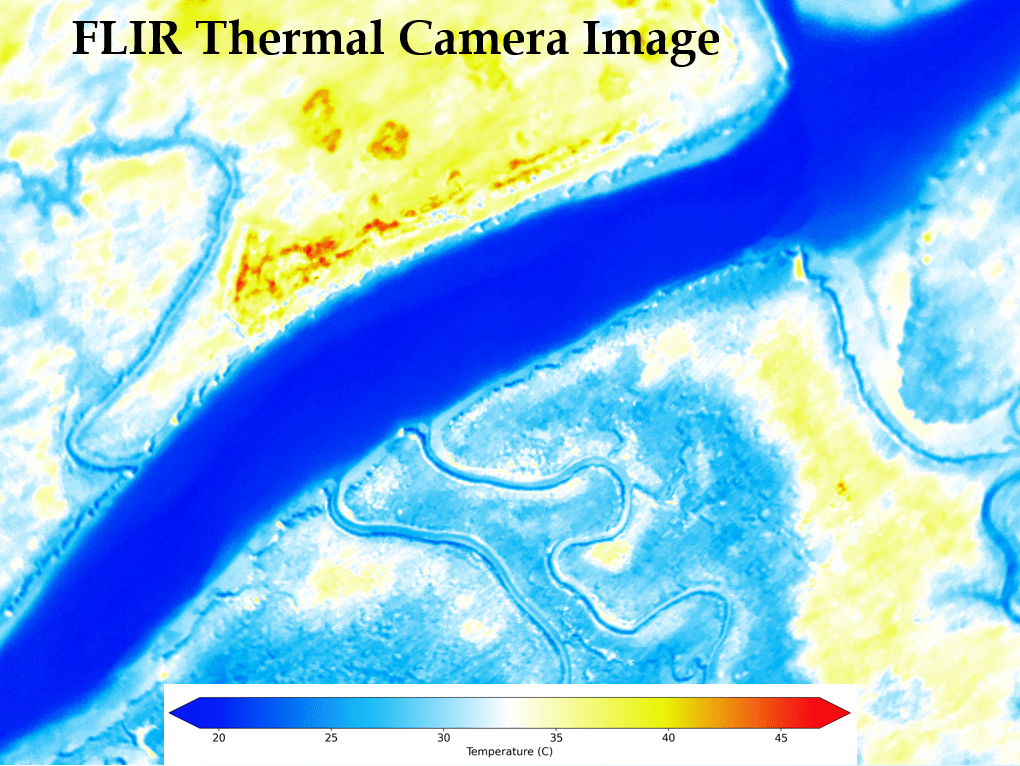

Moisan intends first to set his A-Eye on interpreting images from Earth’s complex aquatic and coastal regions. He expects to reach that goal this year, training the AI using observations from prior flights over the Delmarva Peninsula. Follow-up funding would help him complete the optical pointing goal.

“How do you pick out things that matter in a scan?” Moisan asked. “I want to be able to quickly point the A-Eye at something swept up in the scan, so that from a remote area we can get whatever we need to understand the environmental scene.”

Moisan’s on-board AI would scan the collected data in real time to search for significant features, then steer an optical sensor to collect more detailed data in infrared and other frequencies.
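To make the scan-then-point idea concrete, here is a minimal sketch in Python. It is not Moisan’s method, which the article does not describe; it assumes a simple stand-in technique in which each pixel’s spectral bands are compared with statistics from familiar training observations, and the least familiar pixel becomes the pointing target. The function names, the band values, and the threshold of 3.0 are all hypothetical.

```python
import math
from statistics import mean, stdev

def fit_baseline(training_pixels):
    """Per-band mean and standard deviation from familiar observations."""
    bands = list(zip(*training_pixels))
    return [mean(b) for b in bands], [stdev(b) for b in bands]

def novelty_score(pixel, means, stds):
    """Root-mean-square z-score across bands; larger means less familiar."""
    z2 = [((v - m) / s) ** 2 for v, m, s in zip(pixel, means, stds)]
    return math.sqrt(sum(z2) / len(z2))

def pick_target(scan, means, stds, threshold=3.0):
    """Return (row, col) of the most novel pixel above threshold, else None."""
    best, best_score = None, threshold
    for r, row in enumerate(scan):
        for c, pixel in enumerate(row):
            score = novelty_score(pixel, means, stds)
            if score > best_score:
                best, best_score = (r, c), score
    return best

# Hypothetical three-band reflectances standing in for prior-flight data.
training = [(0.10, 0.20, 0.30), (0.12, 0.18, 0.31),
            (0.09, 0.22, 0.29), (0.11, 0.21, 0.30)]
means, stds = fit_baseline(training)

# A tiny 2x2 "scan"; only the bottom-right pixel looks unfamiliar.
scan = [
    [(0.10, 0.20, 0.30), (0.11, 0.19, 0.31)],
    [(0.10, 0.21, 0.30), (0.60, 0.05, 0.90)],
]
target = pick_target(scan, means, stds)  # where to steer the sensor next
```

A real system would need far richer features than per-band statistics, but the structure is the same: score what the scan sweeps up, then point the sensor only where the score says something unfamiliar is present.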

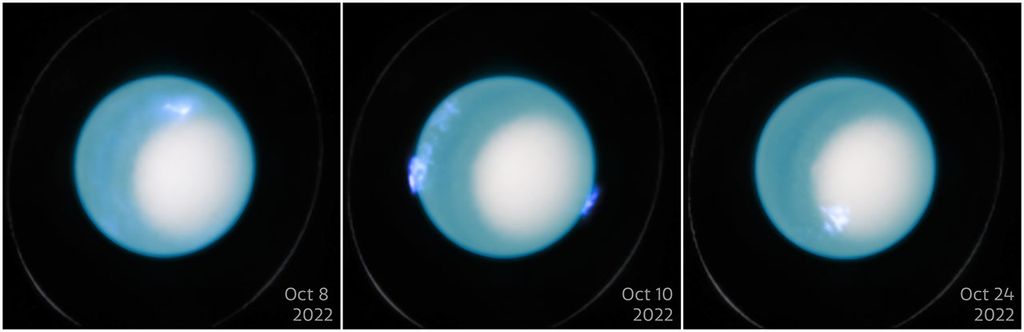

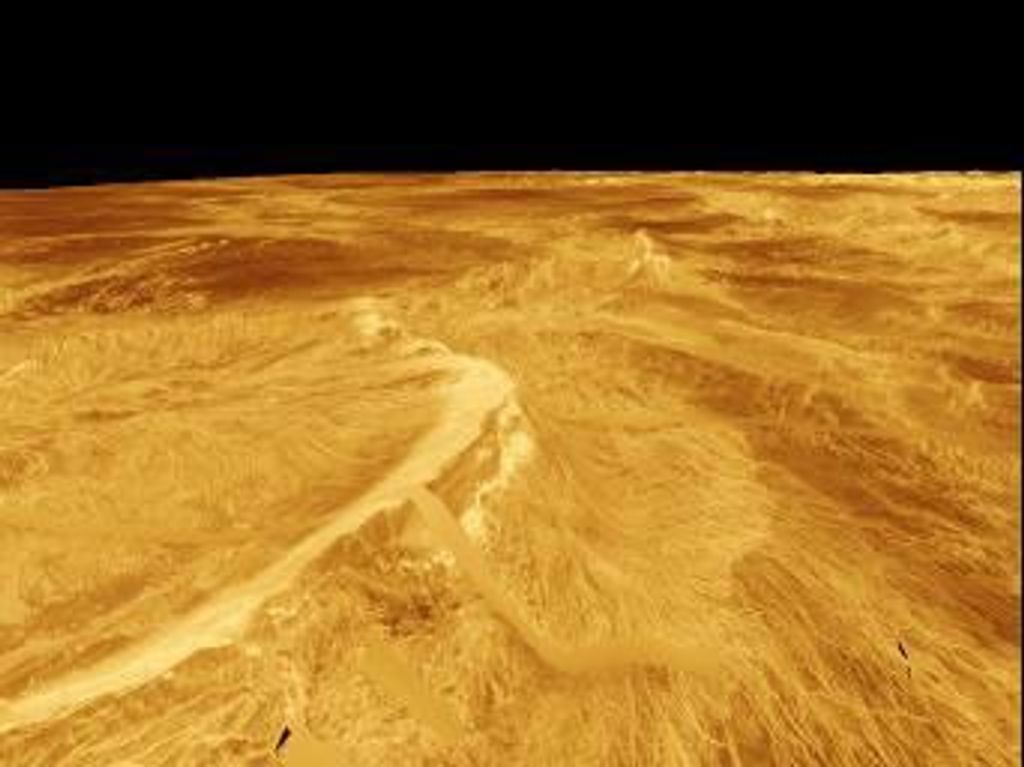

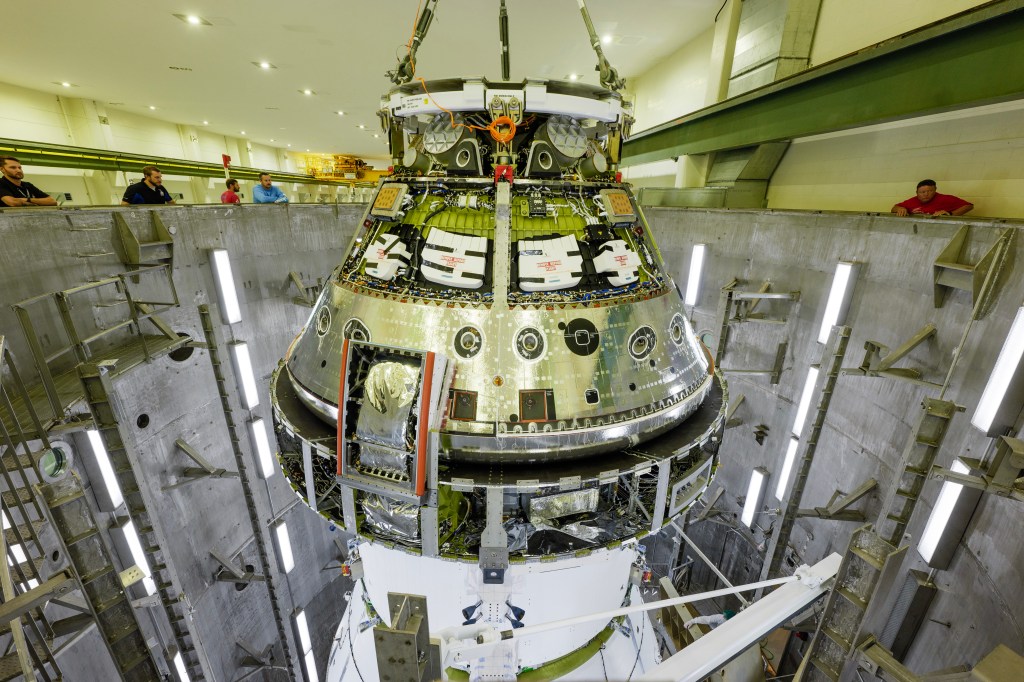

Thinking machines may be set to play a larger role in future exploration of our universe. Sophisticated computers taught to recognize chemical signatures that could indicate life processes, or landscape features like lava flows or craters, could increase the value of science data returned from lunar or deep-space exploration.

Today’s state-of-the-art AI is not quite ready to make mission-critical decisions, MacKinnon said.

“You need some way to take a perception of a scene and turn that into a decision and that’s really hard,” he said. “The scary thing, to a scientist, is to throw away data that could be valuable. An AI might prioritize what data to send first or have an algorithm that can call attention to anomalies, but at the end of the day, it’s going to be a scientist looking at that data that results in discoveries.”
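The prioritization MacKinnon describes, ordering data rather than discarding it, can be sketched in a few lines of Python. This is an illustration only: the record layout, the `score` field, and the `downlink_order` name are assumptions, not part of any NASA system.

```python
def downlink_order(observations):
    """Send the highest anomaly scores first; nothing is thrown away."""
    return sorted(observations, key=lambda o: o["score"], reverse=True)

# Hypothetical observation records with precomputed anomaly scores.
queue = downlink_order([
    {"id": "scan-001", "score": 0.4},
    {"id": "scan-002", "score": 7.9},
    {"id": "scan-003", "score": 1.2},
])
order = [o["id"] for o in queue]
```

Every observation stays in the queue, so the final call on what counts as a discovery still rests with the scientist reading the data.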