If you’ve seen dental plaque or pond scum, you’ve met a biofilm. Among the oldest forms of life on Earth, these ubiquitous, slimy buildups of bacteria grow on nearly everything exposed to moisture and leave behind telltale textures and structures that identify them as living or once-living organisms.

Without training and sophisticated microscopes, however, biofilms can be difficult to identify and are easily confused with textures produced by non-biological processes, such as geological ones.

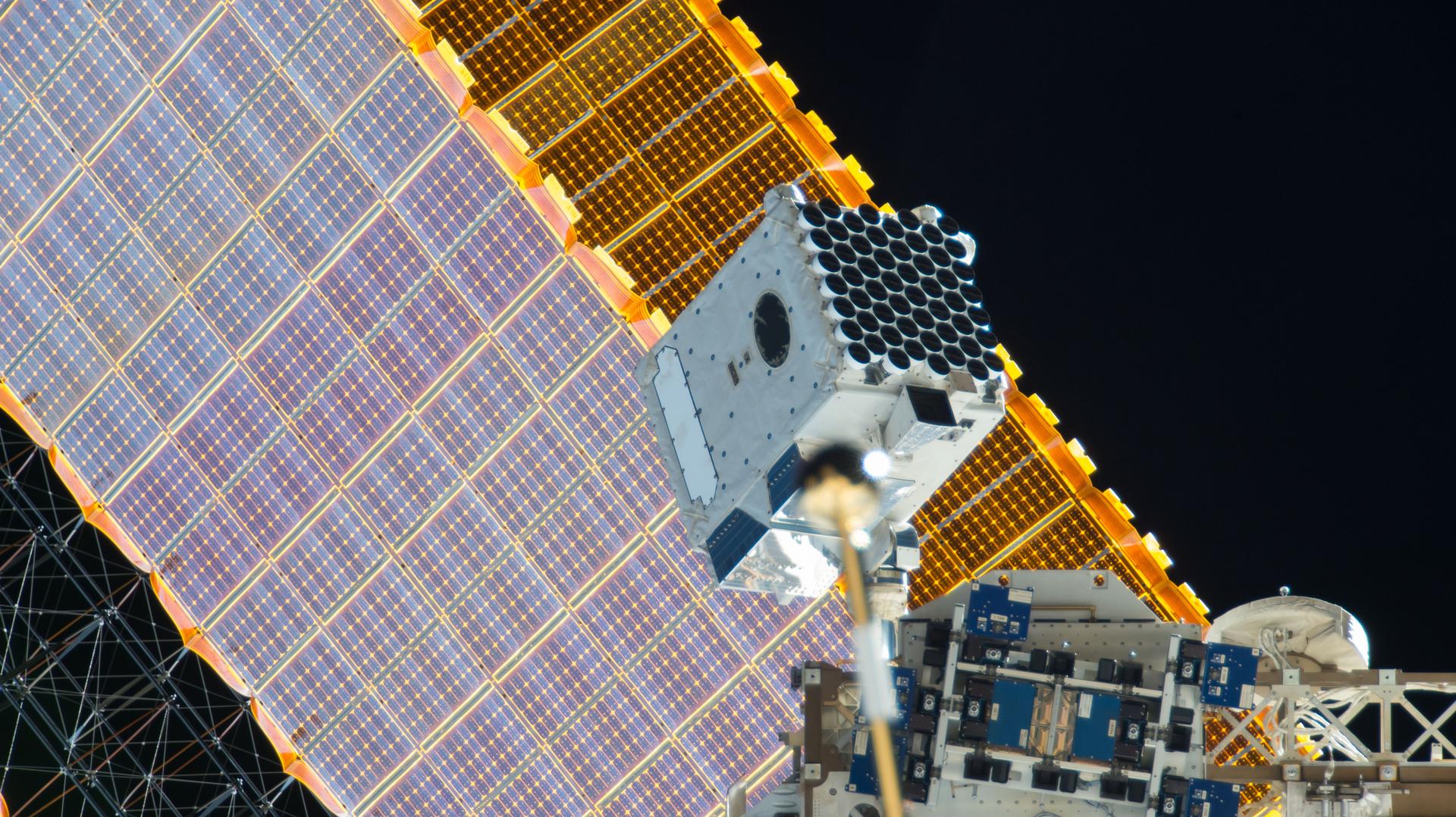

A team of NASA engineers and scientists at the Goddard Space Flight Center in Greenbelt, Maryland, has launched a pilot project to teach algorithms to autonomously recognize and classify biofabrics, the textures in rocks created by living organisms. The idea is to equip a rover with these sophisticated imaging and data-analysis technologies and let the instruments decide in real time which rocks to sample in the search for life, however primitive, on the Moon or Mars.

The multidisciplinary project, led by Goddard materials engineer Ryan Kent, harnesses machine learning, a subset of artificial intelligence in which computer processors run algorithms that, like humans, learn from data, but faster, more accurately, and with less intrinsic bias.

Machine learning is used across many industries, including by credit card companies screening for potentially fraudulent transactions. It gives processors the ability to search for patterns and find relationships in data with little or no prompting from humans.

In recent months, Goddard researchers have begun investigating ways NASA could benefit from machine-learning techniques. Their projects run the gamut, from using machine learning to make real-time crop forecasts or locate wildfires and floods to identifying instrument anomalies.

Other NASA researchers are tapping into these techniques to help identify hazardous Martian and lunar terrain, but no one is applying artificial intelligence to identify biofabrics in the field, said Heather Graham, a University of Maryland researcher who works at Goddard’s Astrobiology Analytical Laboratory and conceived the project.

It is possible that such organisms live, or once lived, on or beneath the surface of Mars. NASA missions have discovered gullies and lake beds indicating that water once existed on this dry, hostile world, and water is a prerequisite for life, at least on Earth. If life did take hold there, its fossilized textures could be preserved on the surfaces of rocks. Life may even have survived below the Martian surface, judging from microbes on Earth that thrive miles underground.

“It can take a couple hours to receive images taken by a Mars rover, even longer for more distant objects,” Graham said, adding that researchers examine these images to determine whether to sample a given rock. “Our idea of equipping a rover with sophisticated imaging and machine-learning technologies would give it some autonomy that would speed up our sampling cadence. This approach could be very, very useful.”

Teaching the Algorithms

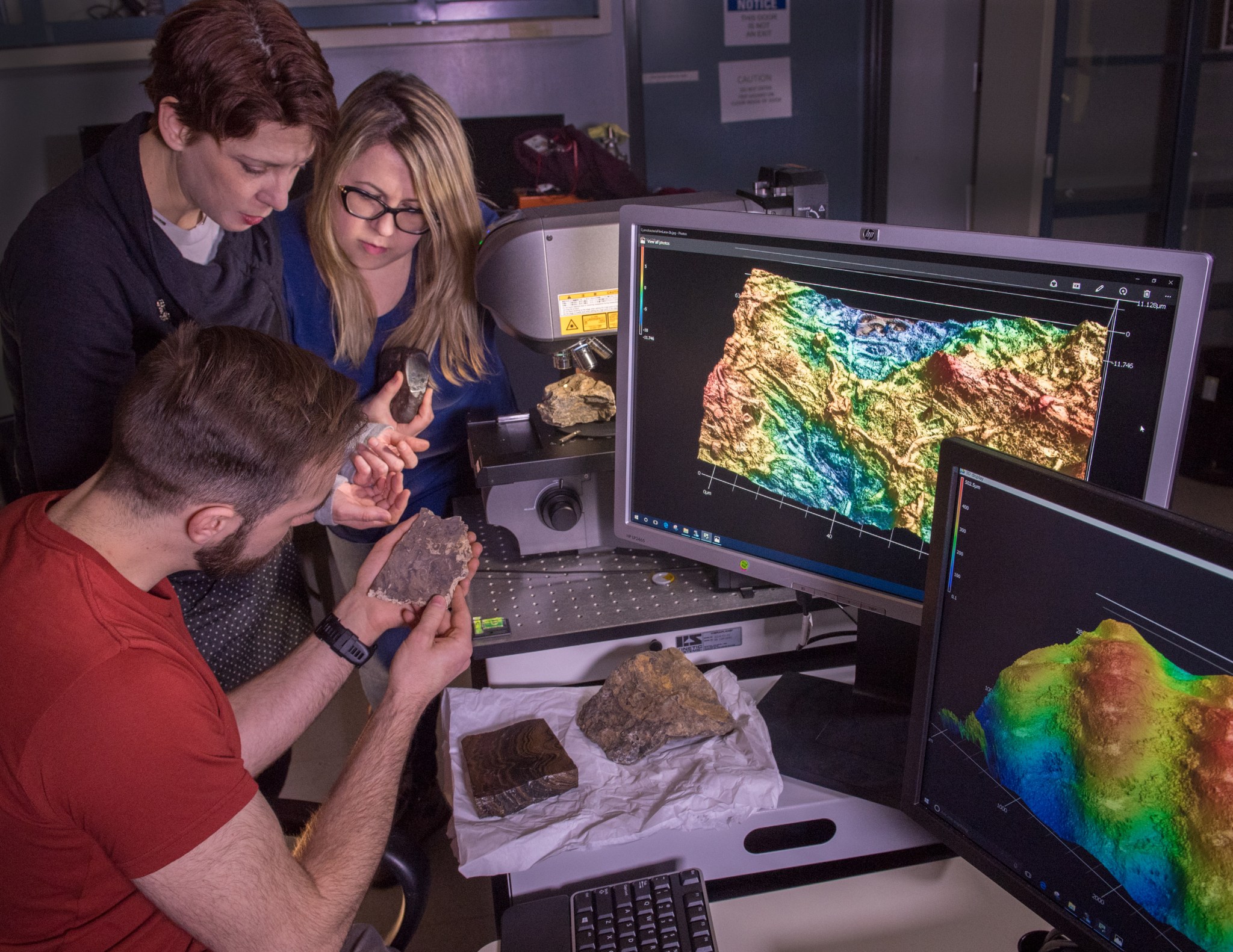

Teaching algorithms to identify biofabrics begins with a laser confocal microscope, a powerful tool that provides high-resolution, high-contrast images of three-dimensional objects. Recently acquired by Goddard’s Materials Branch, the microscope is typically used to analyze materials for spaceflight applications. Although rock scanning wasn’t the reason Goddard bought the tool, it fits the bill for this project because it can resolve small-scale structure.

Kent scans rocks known to contain biofabrics and other textures left by life, as well as rocks that contain none. Machine-learning expert Burcu Kosar and her colleague, Tim McClanahan, plan to feed those high-resolution images into commercially available machine-learning algorithms or models already used for feature recognition.

“This is a huge, huge task. It’s data hungry,” Kosar said, adding that the more images she and McClanahan feed into the algorithms, the greater the chance of developing a highly accurate classifying system that a rover could use to identify potential lifeforms. “The goal is to create a functional classifier, combined with a good imager on a rover.”

The project is now supported by Goddard’s Internal Research and Development (IRAD) program and is a follow-on to an effort Kent started in 2018 under another Goddard research program.

“What we did was take a few preliminary studies to determine if we could see different textures on rocks. We could see them,” Kent said. “This is an extension of that effort. With the IRAD, we’re taking tons of images and feeding them into the machine-learning algorithms to see if they can identify a difference. This technology holds a lot of promise.”

For more Goddard technology news, go to: https://www.nasa.gov/wp-content/uploads/2019/06/spring_2019_final_web_version.pdf?emrc=188e7d

By Lori Keesey

NASA’s Goddard Space Flight Center