Things look different on the Moon. Literally.

Because the Moon isn’t big enough to hold a significant atmosphere, it has no air and no airborne particles to reflect and scatter sunlight. On Earth, shadows in otherwise bright environments are dimly lit with indirect light from these tiny reflections. That lighting provides enough detail that we get an idea of shapes, holes and other features that could be obstacles to someone – or some robot – trying to maneuver in shadow.

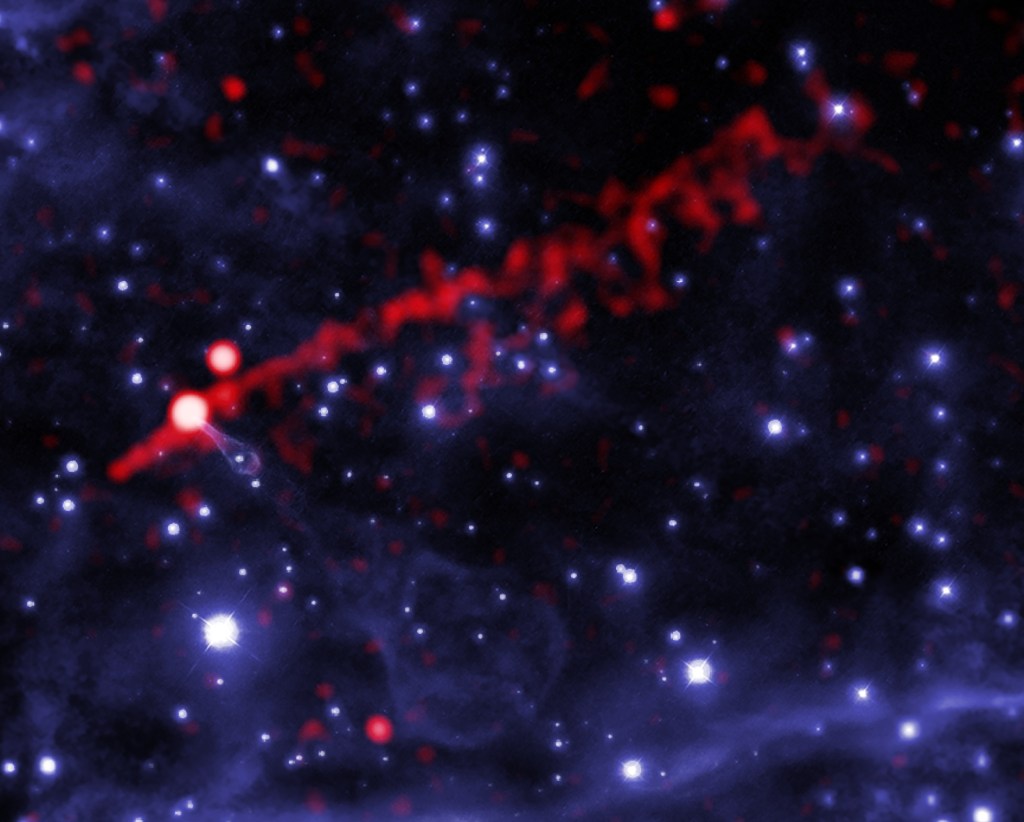

“What you get on the Moon are dark shadows and very bright regions that are directly illuminated by the Sun – the Italian painters in the Baroque period called it chiaroscuro – alternating light and dark,” said Uland Wong, a computer scientist at NASA’s Ames Research Center in Silicon Valley. “It’s very difficult to be able to perceive anything for a robot or even a human that needs to analyze these visuals, because cameras don’t have the sensitivity to be able to see the details that you need to detect a rock or a crater.”

In addition, the dust covering the Moon is itself otherworldly. Because of the way light reflects off the jagged shapes of individual grains, and because the dust is so uniform in color, the surface looks different depending on the direction it is lit from. At some lighting angles it loses its visible texture.

Some of these visual challenges are evident in Apollo mission surface images, but the early lunar missions mostly waited until lunar “afternoon” so astronauts could safely explore the surface in well-lit conditions.

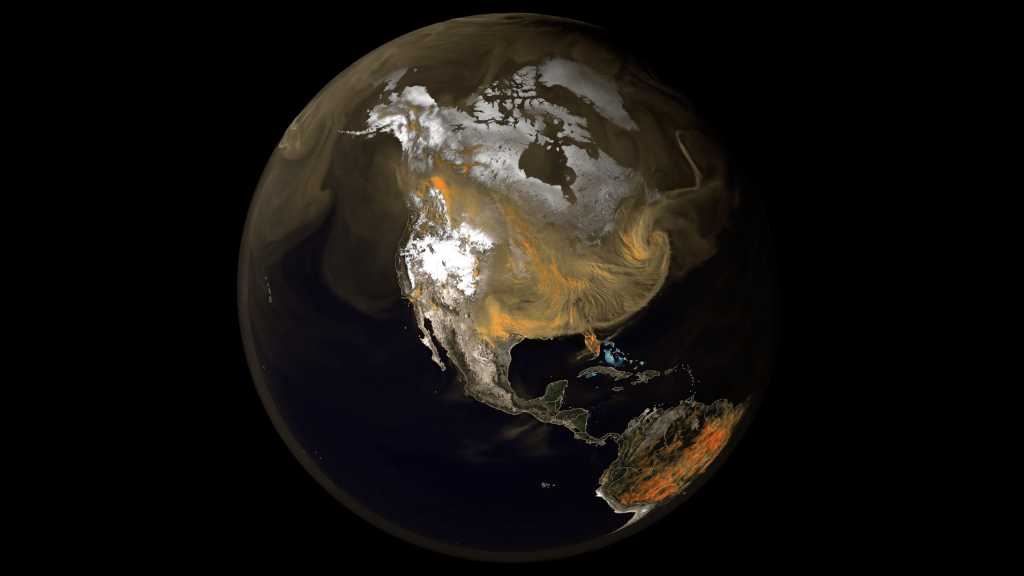

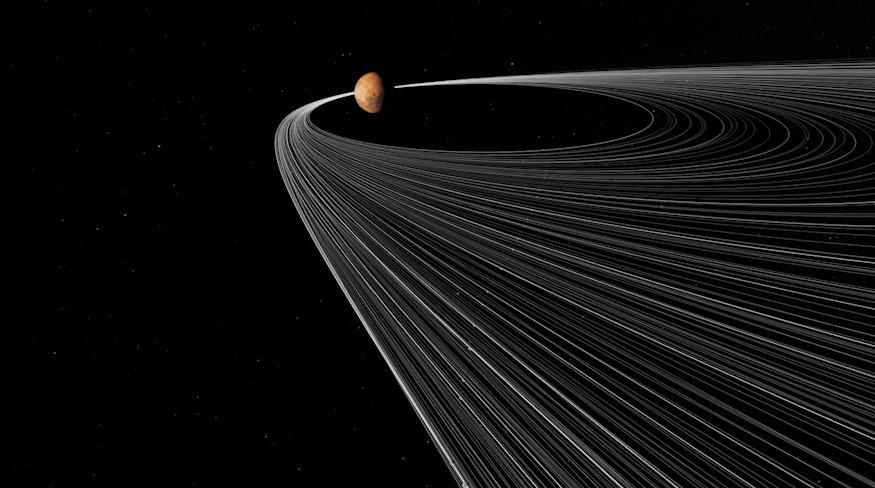

Future lunar rovers may target unexplored polar regions of the Moon to drill for water ice and other volatiles, which are essential for human exploration missions but heavy to bring along. At the Moon’s poles, the Sun is always near the horizon, and long shadows hide many potential dangers in the terrain, such as rocks and craters. Pure darkness is a challenge for robots that need to use visual sensors to safely explore the surface.
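To get a feel for why a Sun that sits near the horizon produces such long shadows, the geometry can be sketched in a few lines of Python. This is an illustrative calculation only: it assumes flat ground and a simple upright obstacle, and the function name `shadow_length` and the sample heights and angles are made up for the example rather than taken from the Ames team's work.

```python
import math


def shadow_length(obstacle_height_m: float, sun_elevation_deg: float) -> float:
    """Length of the shadow cast on flat ground by an upright obstacle.

    Assumes flat terrain and a point-like Sun (illustrative simplifications).
    """
    if not 0.0 < sun_elevation_deg <= 90.0:
        raise ValueError("sun elevation must be in (0, 90] degrees")
    return obstacle_height_m / math.tan(math.radians(sun_elevation_deg))


# A half-meter rock under progressively lower Sun angles (sample values).
for elevation_deg in (45.0, 10.0, 2.0):
    length_m = shadow_length(0.5, elevation_deg)
    print(f"Sun at {elevation_deg:4.1f} deg: shadow is {length_m:.2f} m long")
```

As the elevation angle shrinks toward zero, the tangent shrinks with it, so even a small rock can hide many meters of terrain behind it.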

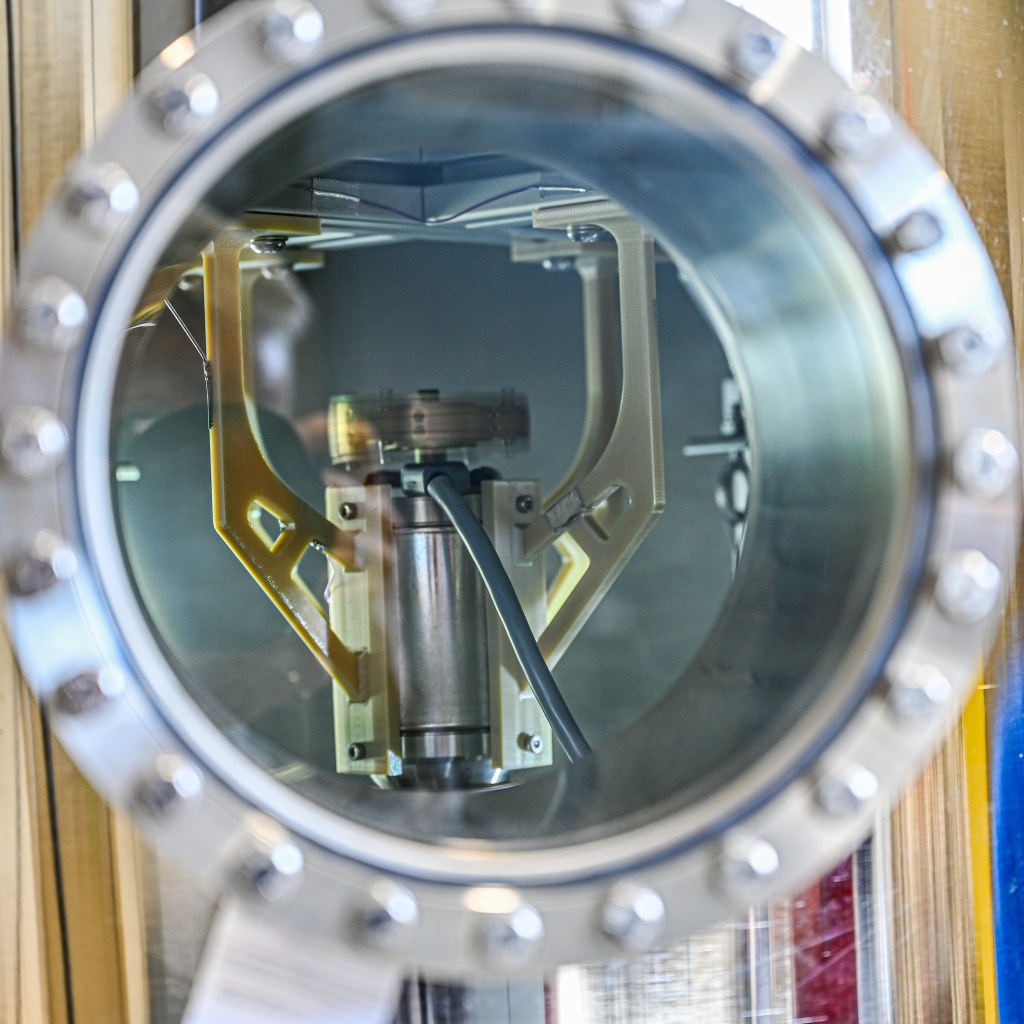

Wong and his team in Ames’ Intelligent Robotics Group are tackling this by gathering real data from simulated lunar soil and lighting.

“We’re building these analog environments here and lighting them like they would look on the Moon with solar simulators, in order to create these sorts of appearance conditions,” said Wong. “We use a lot of 3-dimensional imaging techniques, and use sensors to create algorithms, which will both help the robot safeguard itself in these environments, and let us train people to interpret it correctly and command a robot where to go.”

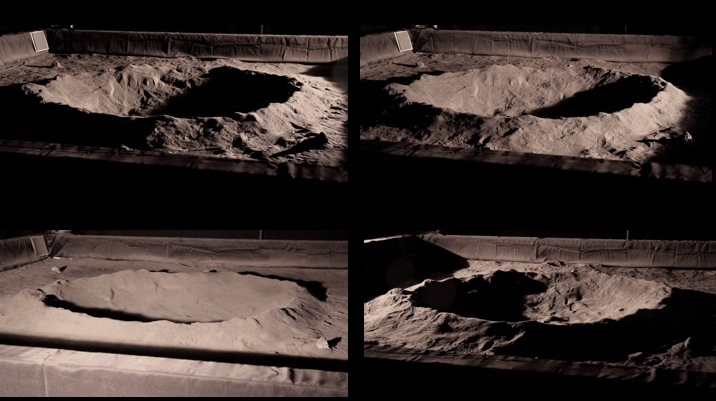

The team uses a ‘Lunar Lab’ testbed at Ames – a 12-foot-square sandbox containing eight tons of JSC-1A, a human-made lunar soil simulant. Craters, surface ripples and obstacles are shaped with hand tools, and rocks are added to the terrain to simulate boulder fields or specific obstacles. The team then dusts the terrain and rocks with an added layer of simulant to produce the “fluffy” top layer of lunar soil, which erases shovel and brush marks and leaves a thin coating on the faces of rocks. Each terrain design in the testbed is generated from statistics on common features observed by spacecraft around the Moon.
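The article does not say which statistics the team uses, so the following is only a rough sketch of what a statistically generated rock layout could look like. It assumes, purely for illustration, that rock diameters follow a truncated power law and that rocks are scattered uniformly over the sandbox; the exponent, size limits, rock count and the name `sample_rock_field` are all hypothetical.

```python
import random

SANDBOX_SIDE_M = 12 * 0.3048  # the 12-foot-square testbed, in meters


def sample_rock_field(n_rocks, d_min_m=0.05, d_max_m=0.5, exponent=2.5, seed=0):
    """Return a list of (x, y, diameter) tuples for a hypothetical rock field.

    Diameters are drawn by inverse-transform sampling from a power law
    truncated to [d_min_m, d_max_m]; positions are uniform in the sandbox.
    All parameter values are assumptions, not measured lunar statistics.
    """
    rng = random.Random(seed)
    lo = d_min_m ** -exponent
    hi = d_max_m ** -exponent
    rocks = []
    for _ in range(n_rocks):
        u = rng.random()
        # Invert the truncated power-law cumulative distribution.
        diameter = (lo - u * (lo - hi)) ** (-1.0 / exponent)
        x = rng.uniform(0.0, SANDBOX_SIDE_M)
        y = rng.uniform(0.0, SANDBOX_SIDE_M)
        rocks.append((x, y, diameter))
    return rocks


for x, y, d in sample_rock_field(5):
    print(f"rock at ({x:.2f} m, {y:.2f} m), diameter {d:.2f} m")
```

A power law of this kind produces many small rocks and only a few large ones. Fixing the seed makes a layout repeatable, which is convenient if the same design has to be rebuilt by hand.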

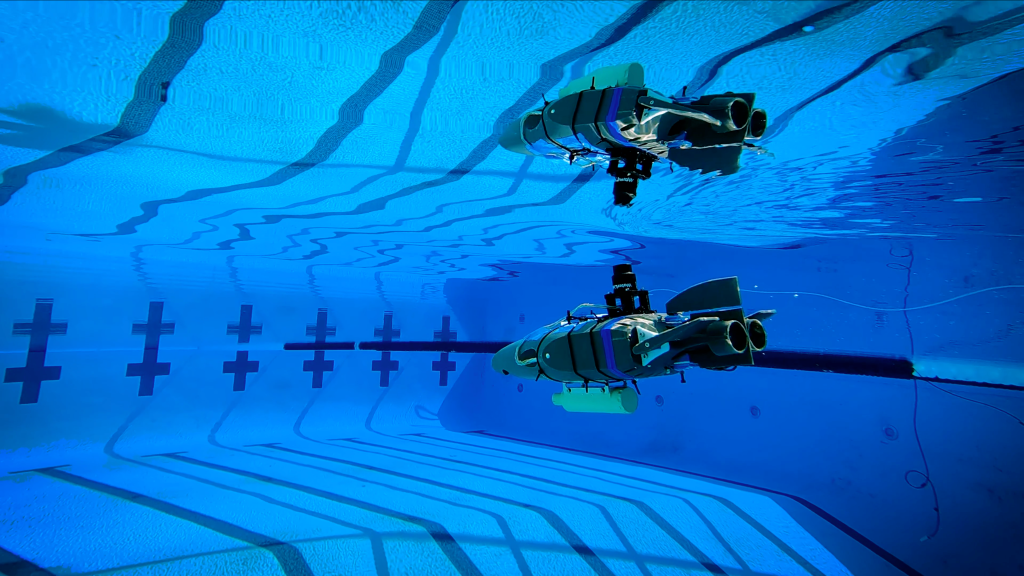

Solar simulator lights are set up around the terrain to create Moon-accurate low-angle, high-contrast illumination. Two cameras, called a stereo imaging pair, mimic how human eyes are set apart to help us perceive depth. The team captured photographs of multiple testbed setups and lighting angles to create a dataset to inform future rover navigation.
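The depth cue that a stereo pair provides can be illustrated with the standard pinhole-camera relation, in which distance equals focal length times camera separation divided by disparity (the shift of a feature between the two images). The sketch below assumes an idealized, rectified pair of identical cameras; the function name `depth_from_disparity` and the numbers used are illustrative and do not describe the actual Lunar Lab cameras.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Distance to a feature seen by an idealized, rectified stereo pair.

    focal_length_px: camera focal length expressed in pixels
    baseline_m:      separation between the two cameras, in meters
    disparity_px:    horizontal shift of the feature between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Illustrative values only: nearby features shift more between the two views.
for disparity in (120.0, 60.0, 15.0):
    depth_m = depth_from_disparity(1000.0, 0.3, disparity)
    print(f"disparity {disparity:5.1f} px -> depth {depth_m:.2f} m")
```

The relation only helps where the same feature can be found in both images. A patch in pure shadow offers nothing to match, so no disparity, and therefore no depth, can be measured there, which is one reason the lighting conditions matter so much.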

“But you can only shovel so much dirt; we are also using physics-based rendering, and are trying to photo-realistically recreate the illumination in these environments,” said Wong. “This allows us to use a supercomputer to render a bunch of images using models that we have decent confidence in, and this gets us a lot more information than we would taking pictures in a lab with three people, for example.”

The result, a Polar Optical Lunar Analog Reconstruction or POLAR dataset, provides standard information for rover designers and programmers to develop algorithms and set up sensors to safely navigate. The POLAR dataset is applicable not only to our Moon, but to many types of planetary surfaces on airless bodies, including Mercury, asteroids, and regolith-covered moons like Mars’ Phobos.

So far, early results show that stereo imaging is promising for use on rovers that will explore the lunar poles.

“One of the mission concepts that’s in development right now, Resource Prospector, that I have the privilege of working on, might be the first mission to land a robot and navigate in the polar regions of the Moon,” said Wong. “And in order to do that, we have to figure out how to navigate where nobody’s ever been.”

This research is funded by the agency’s Advanced Exploration Systems and Game Changing Development programs. NASA’s Solar System Exploration Research Virtual Institute provides the laboratory facilities and operational support.

For more information about NASA technology for future exploration missions, visit:

https://www.nasa.gov/technology

An audio version of this feature is available on the “NASA in Silicon Valley” podcast.