Foundation Models

What is a Foundation Model?

A foundation model (FM) is one of a diverse class of very large AI/ML models that are trained on broad, unlabeled data and can serve as an efficient basis for building more specialized models. FMs often rely on techniques such as self-supervision (a form of unsupervised learning), and many are built around deep neural networks. A well-known example is GPT, a large language model (LLM). A thorough discussion of the promises and challenges of foundation models is available from the Stanford Center for Research on Foundation Models.

In this context, broad data usually means very large datasets drawn from a wide range of sources (e.g., written text or images). Self-supervised training is often paired with architectures such as the Transformer (described in “Attention Is All You Need”), whose attention mechanism applies differential weighting, or focus of attention, to the significant, or semantically important, parts of the input data.
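The differential weighting performed by attention can be illustrated with a minimal sketch of scaled dot-product attention in plain Python. This is a simplification under stated assumptions: the token vectors are made-up toy values, and the learned query, key, and value projections used in a real Transformer are omitted, so the tokens stand in for all three roles.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy "token" vectors (hypothetical values, for illustration only).
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

In this toy case the first token places more weight on itself and on the similar second token than on the dissimilar third, which is the "focus of attention" described above.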

In effect, a foundation model builds a new representation (often called a latent representation, or a compression) of the data that can then be used to address more specific questions or processes. A foundation model can be expensive to build, but it may enable many new uses with only a small amount of additional fine-tuning on small, task-specific datasets.
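The idea of reusing a latent representation can be sketched as follows. This is a hedged toy, not how any particular foundation model is fine-tuned: `frozen_encoder` is a hypothetical stand-in for an expensive pretrained model, the four-example dataset is invented, and only a small logistic "head" is trained on top of the fixed representation (a simple variant sometimes called head-only training or linear probing, rather than full fine-tuning of all weights).

```python
import math

def frozen_encoder(x):
    """Hypothetical stand-in for a pretrained model: maps raw input to a
    latent vector. Its parameters are never updated below."""
    return [math.tanh(x[0] + x[1]), math.tanh(x[0] - x[1])]

def predict(head, z):
    """Logistic head: probability that latent vector z belongs to class 1."""
    s = head["b"] + sum(w * zi for w, zi in zip(head["w"], z))
    return 1.0 / (1.0 + math.exp(-s))

def fine_tune_head(data, steps=500, lr=0.5):
    """Train only the small head with gradient descent on a small dataset."""
    head = {"w": [0.0, 0.0], "b": 0.0}
    # Encode once: the expensive representation is reused, not retrained.
    latents = [(frozen_encoder(x), y) for x, y in data]
    for _ in range(steps):
        for z, y in latents:
            err = predict(head, z) - y  # gradient of the log loss w.r.t. s
            head["w"] = [w - lr * err * zi for w, zi in zip(head["w"], z)]
            head["b"] -= lr * err
    return head

# A small, task-specific labeled dataset (invented values).
small_dataset = [([1.0, 0.2], 1), ([0.8, -0.1], 1),
                 ([-0.9, 0.1], 0), ([-1.0, -0.3], 0)]
head = fine_tune_head(small_dataset)
predictions = [round(predict(head, frozen_encoder(x))) for x, _ in small_dataset]
```

The design point is that the costly step (building the representation) happens once, while each new use needs only a few trainable parameters and a handful of labeled examples.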

For example, large language models (LLMs) build a representation of enormous amounts of text. That representation can be queried much more efficiently and, with the right approach, can take natural-language prompts and generate natural-language outputs. Because the training is broad, the representation can serve as the basis for a more finely tuned model that addresses specific needs. For instance, an LLM can be fine-tuned on scientific literature and then generate synopses or collated comparisons of that literature in response to user prompts, or respond to prompts about new text that a user supplies (e.g., a new paper). AstroLLaMA is an example of a science model fine-tuned from an LLM foundation model, using 300,000 astronomy paper abstracts.

Scientific Foundation Models

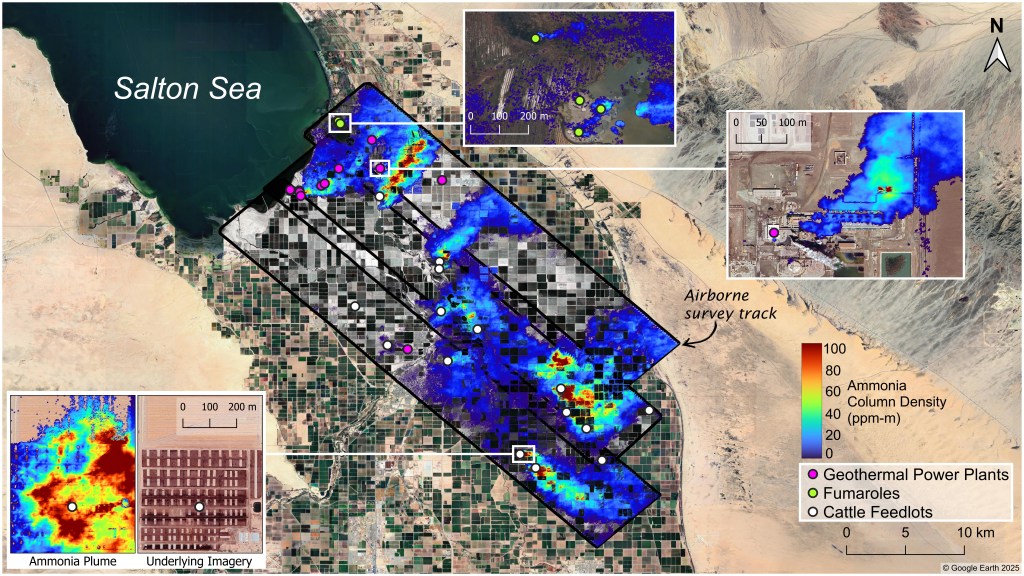

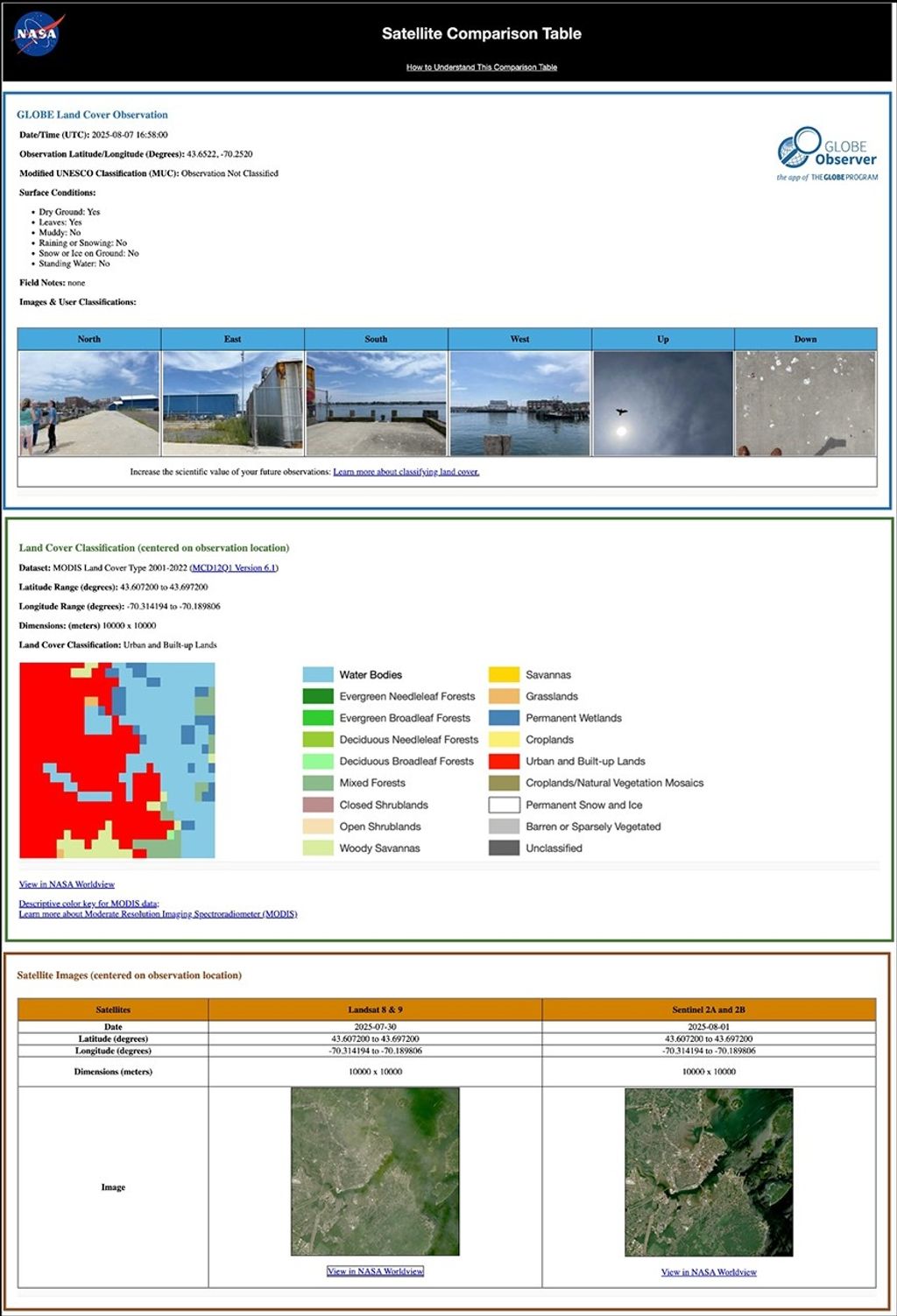

NASA and IBM have partnered to build a geospatial foundation model, Prithvi-100M, from NASA’s Harmonized Landsat Sentinel-2 (HLS) data using a temporal Vision Transformer. The model, pretrained on this large, unlabeled surface reflectance dataset, can be fine-tuned for studies of flood water detection, fire burn scars, and many other uses (see the technical paper).

Work in astrophysics has produced Zoobot, a foundation-like model whose representation of galaxy morphology data can be fine-tuned with a smaller dataset from a specific project. Other examples include a foundation model for radio astronomy that uses data on galaxies, and a foundation model for stellar data.

Several dozen foundation models exist in the general biosciences, including the well-known AlphaFold model for protein structure prediction. Work is also under way on FMs for particle physics, such as OmniJet-alpha.

A growing number of FM projects are listed under the foundation model topic on GitHub.