A new photogrammetry technique has been developed that allows accurate six-degree-of-freedom (6-DOF) position and attitude measurements to be made of large, moving rigid bodies.

This technique can provide data on vehicle dynamics or motions with accuracy equal to or better than that of inertial measurement units, and it has been used on multiple NESC-sponsored projects. It offers maximum flexibility in choosing the number of cameras, the types of lenses, and the placement of those cameras in accessible locations for a flight experiment or ground test facility, because the camera views are not required to overlap.

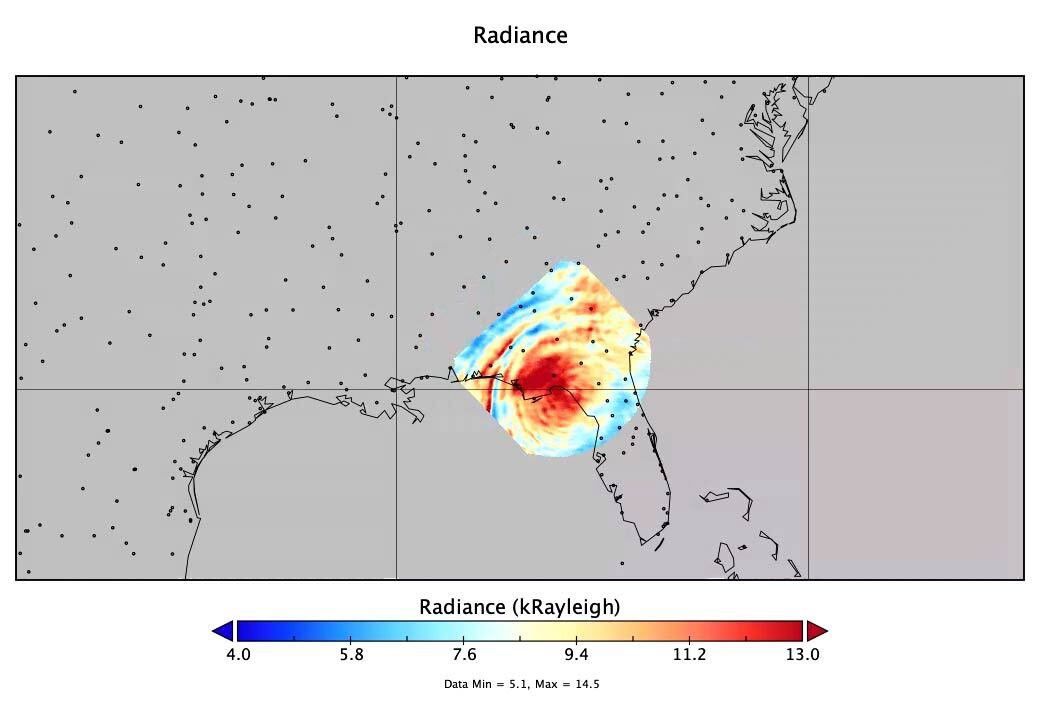

The technique was developed to measure the separation of a full-scale Orion crew module (CM) from its protective forward fairing during NASA’s Max Launch Abort System (MLAS) flight test. Photogrammetric analysis of the 6-DOF separation was a critical measurement for comparison with inertial measurement units. Conventional photogrammetry techniques require low-distortion lenses and overlapping camera views of the object of interest, and they could not be used on the MLAS test due to space constraints. A new algorithm was therefore developed that works with fish-eye lenses and non-overlapping views. Later, the technique was used to accurately measure the position and attitude of a full-scale Orion CM during water entry testing at various entry angles and velocities. Again, multiple high-speed cameras were used with views that did not fully overlap. The algorithm was extended to accommodate different camera lenses, calibration methods, and more automated processing. Over 60 drops were recorded and processed.

Photogrammetric targets are first applied to the body and surveyed. Each camera observes a separate subset of those targets, but because all the targets are constrained to the same rigid body, a single set of equations can be developed that couples the observations from every camera. The solution to these equations is the unique 6-DOF position and attitude of the rigid object that produces the target patterns observed in all the cameras. The cameras were calibrated with modern techniques that capture and analyze numerous images of objects with well-known structure, such as arrays of regularly spaced dots or squares. Finally, a robust target-tracking algorithm was implemented that could follow targets from frame to frame, even amid significant flying debris or water droplets. All these technologies were combined into a MATLAB/LabVIEW platform that could be used at the test site immediately after an experiment. The relative position of the CM and fairing was measured to within 0.50 inch even after 16 feet of separation. For the water entry tests, displacements were obtained with millimeter accuracy and attitude to within hundredths of a degree.
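The rigid-body constraint described above can be illustrated with a minimal Python sketch. It is not the NESC MATLAB/LabVIEW implementation, and it rests on several assumptions: an ideal pinhole camera (lens distortion is taken to be already removed by calibration), camera poses that are known in a common world frame, and a plain Gauss-Newton solver with a finite-difference Jacobian standing in for whatever solver the actual tool uses. All names (`rodrigues`, `project`, `residuals`, `solve_pose`, the `views` layout) and all numbers in the demo are hypothetical and synthetic.

```python
import numpy as np

def rodrigues(rvec):
    """Rotation vector (axis * angle, radians) -> 3x3 rotation matrix."""
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    K = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    return np.eye(3) + np.sin(theta) * K + (1 - np.cos(theta)) * (K @ K)

def project(pose, targets_body, cam):
    """Ideal pinhole projection of body-frame targets into one camera.
    pose = [rotation vector (3), translation (3)], body -> world."""
    R_body, t_body = rodrigues(pose[:3]), pose[3:]
    world = targets_body @ R_body.T + t_body       # body -> world
    pc = world @ cam["R"].T + cam["t"]             # world -> camera
    return cam["f"] * pc[:, :2] / pc[:, 2:3]       # perspective divide

def residuals(pose, views):
    """Stack the reprojection errors of every camera into one vector.
    All cameras share the same six pose unknowns: that is the rigid-body
    constraint, and it is why the views need not overlap."""
    return np.concatenate([
        (project(pose, v["targets"], v["cam"]) - v["pixels"]).ravel()
        for v in views
    ])

def solve_pose(views, pose0, iters=50, eps=1e-6):
    """Gauss-Newton iterations with a forward-difference Jacobian."""
    pose = np.asarray(pose0, dtype=float).copy()
    for _ in range(iters):
        r = residuals(pose, views)
        J = np.empty((r.size, 6))
        for j in range(6):
            step = np.zeros(6)
            step[j] = eps
            J[:, j] = (residuals(pose + step, views) - r) / eps
        delta = np.linalg.lstsq(J, -r, rcond=None)[0]
        pose += delta
        if np.linalg.norm(delta) < 1e-10:
            break
    return pose

# Synthetic demo: two cameras at right angles, each seeing different targets.
cam_a = {"R": np.eye(3), "t": np.array([0.0, 0.0, 10.0]), "f": 1000.0}
cam_b = {"R": np.array([[0.0, 0.0, -1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]),
         "t": np.array([0.0, 0.0, 10.0]), "f": 1000.0}
targets_a = np.array([[-1, -1, -1], [1, -1, -1], [1, 1, -1], [-1, 1, -1]], float)
targets_b = np.array([[-1, -1, -0.5], [-1, 1, -0.5], [-1, 1, 1], [-1, -1, 1]], float)

true_pose = np.array([0.05, -0.10, 0.08, 0.2, -0.1, 0.3])
views = [
    {"cam": cam_a, "targets": targets_a, "pixels": project(true_pose, targets_a, cam_a)},
    {"cam": cam_b, "targets": targets_b, "pixels": project(true_pose, targets_b, cam_b)},
]
estimate = solve_pose(views, np.zeros(6))
print("estimated pose:", np.round(estimate, 6))
```

Because every camera contributes rows to the same residual vector, adding a camera or changing which targets it sees only changes the `views` list; the six unknowns stay the same. A real system would also need a lens model suited to fish-eye optics, measurement noise handling, and a reasonable initial guess for each frame, for example the pose from the previous frame.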

For more information, please contact Kurt Severance (kurt.severance@nasa.gov).